Nebius ($NBIS) is a bet on AI infrastructure’s awkward middle child

The former Yandex spinoff has $20 billion in contracts but trades at 100x earnings—and the market’s asking the wrong questions

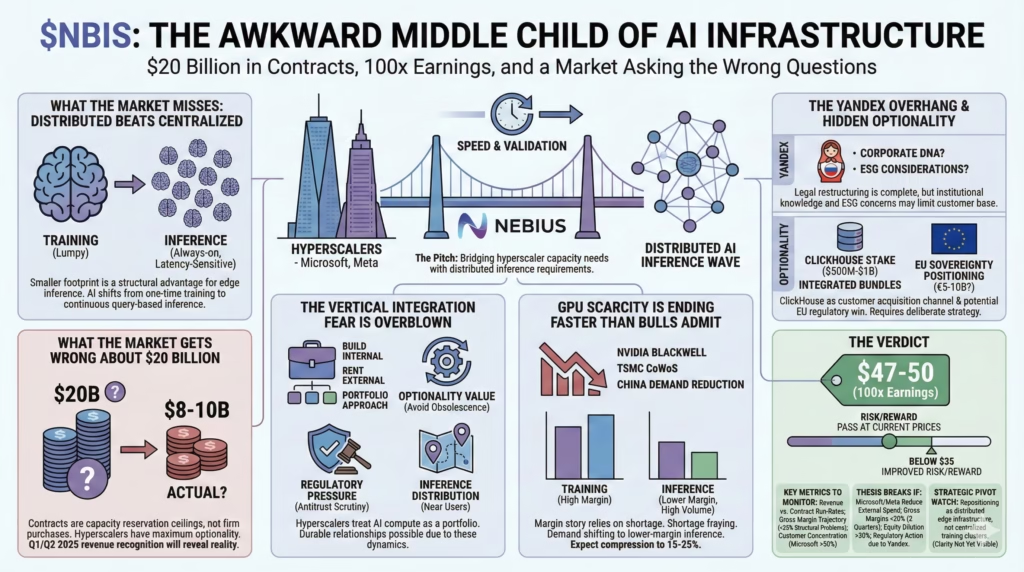

The pitch

Nebius sits at the intersection of two powerful forces: hyperscalers who need GPU capacity faster than they can build it, and an AI inference wave that favors distributed infrastructure over centralized mega-data-centers. The Microsoft and Meta contracts totaling $20B+ aren’t just revenue. They’re validation that even the best-capitalized companies on Earth would rather rent than build when speed matters.

Here’s what the market misses. Nebius’s smaller footprint, typically viewed as a weakness, could become a structural advantage as AI shifts from lumpy training runs to always-on, latency-sensitive inference at the edge.

The friction

At 100x+ earnings, the stock prices in flawless execution on data center deployments that don’t yet exist, using contracts whose actual terms remain opaque. Customer concentration is extreme—if Microsoft represents 50%+ of future revenue, any strategic shift toward in-sourcing becomes an existential event. Not a competitive headwind. An existential event.

The GPU shortage narrative that justified premium pricing is already stale. Nvidia’s Blackwell ramp and expanding TSMC capacity suggest margin compression arrives before Nebius can prove its model works.

Time to dig into the details.

Why $20 billion in contracts might only mean $10 billion in revenue

Enterprise cloud contracts are notoriously squishy. In the absence of disclosed terms, the Microsoft deal most likely represents a capacity reservation ceiling, not a firm purchase commitment. These structures give hyperscalers maximum optionality: they’ve locked in access to GPU hours they might need while retaining the ability to scale down if internal builds accelerate or AI demand patterns shift.

The Microsoft deal’s $17-19B headline number is likely a multi-year maximum that requires Nebius to successfully deploy data centers that take 12-18 months to build. The gap between announced contract value and recognized revenue could run 40-60% in a realistic scenario. A five-year $17B contract contingent on Nebius hitting deployment milestones, with flexible volume terms, might translate to $8-10B in actual revenue. Still transformational, but roughly half what the headlines imply.
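The haircut arithmetic is simple enough to sketch. This assumes the 40-60% conversion gap applies uniformly to a $17B headline value and that the result spreads evenly over the five-year term; both are the scenario's own assumptions, not disclosed contract terms, and the function name is illustrative.

```python
def recognized_revenue_range(headline_b, gap_low, gap_high):
    """Return (low, high) recognized revenue in $B.

    gap_low and gap_high are the assumed fractions of the headline value
    that never become recognized revenue (e.g. 0.40 and 0.60).
    """
    if not 0.0 <= gap_low <= gap_high <= 1.0:
        raise ValueError("expected 0 <= gap_low <= gap_high <= 1")
    # The larger gap leaves less revenue, so it sets the low end of the range.
    low = headline_b * (1.0 - gap_high)
    high = headline_b * (1.0 - gap_low)
    return low, high


# Assumed inputs: a $17B headline value and a 40-60% conversion gap.
low, high = recognized_revenue_range(17.0, 0.40, 0.60)
term_years = 5  # the five-year term used in the scenario
print(f"Total: ${low:.1f}B to ${high:.1f}B")
print(f"Per year: ${low / term_years:.2f}B to ${high / term_years:.2f}B")
```

Run as written, the range comes out at roughly $6.8B to $10.2B in total, or about $1.4-2.0B per year, so the $8-10B estimate sits inside that band.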

Revenue recognition in Q1/Q2 2025 versus implied run-rates will reveal whether these contracts have teeth or are primarily marketing vehicles.
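One way to make "implied run-rate" concrete is a straight-line calculation: headline value divided by the number of quarters in the term, compared with what a quarter actually recognizes. A minimal sketch; the $17B, five-year inputs echo the scenario above, while the $0.5B recognized quarter and both function names are hypothetical.

```python
def implied_quarterly_run_rate(contract_b, term_years):
    """Straight-line revenue per quarter ($B) implied by a contract's headline value."""
    return contract_b / (term_years * 4)


def drawdown_ratio(recognized_quarter_b, contract_b, term_years):
    """Recognized quarterly revenue as a fraction of the straight-line run-rate.

    Values well below 1.0 mean customers are drawing less than the headline
    implies, or that capacity is not yet online to be drawn on.
    """
    return recognized_quarter_b / implied_quarterly_run_rate(contract_b, term_years)


# Hypothetical quarter: $0.5B recognized against a $17B, five-year contract.
run_rate = implied_quarterly_run_rate(17.0, 5)
ratio = drawdown_ratio(0.5, 17.0, 5)
print(f"Implied run-rate ${run_rate:.2f}B/quarter, drawdown {ratio:.0%}")
```

Straight-line is a deliberate simplification. With data centers taking 12-18 months to build, early quarters should land well below 1.0 even on a healthy contract, so the trend in the ratio says more than any single print.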

Bulls point to these deals as validation. That’s partially correct. Microsoft and Meta wouldn’t sign capacity agreements with an unreliable partner. But treating announced values as near-certain revenue ignores how enterprise cloud procurement actually works. Watch for any “scope adjustments” buried in 10-Ks and customer concentration metrics that reveal true dependency levels.

The vertical integration fear is probably overblown

Consensus bearishness centers on a straightforward narrative: hyperscalers are using Nebius as bridge capacity while building internal GPU infrastructure, then will crush external providers once their own data centers come online.

This logic sounds compelling but misses how hyperscalers actually manage AI infrastructure.

Microsoft, Google, and Amazon are simultaneously building internal capacity and increasing external cloud purchases. They’re treating AI compute as a portfolio, not pursuing pure vertical integration. Three dynamics explain why:

First, optionality value. In a rapidly evolving technology landscape, hyperscalers benefit from not locking in massive internal capex that could become obsolete within a GPU generation or two.

Second, regulatory pressure. Every hyperscaler faces antitrust scrutiny over vertical integration, and Microsoft in particular draws active U.S. antitrust attention. Being seen to “support the ecosystem” has strategic value. This reminds me of the telecom equipment dynamics from the early 2000s, though that’s probably a story for another time.

Third, inference distribution. As AI shifts toward always-on inference, compute needs to be geographically distributed near users, not concentrated in centralized mega-data-centers. Neoclouds (GPU-focused specialist clouds like Nebius) with distributed footprints may be better positioned for this future than hyperscaler architectures designed for training.

The bear case assumes hyperscalers want to own all AI compute. The emerging reality is more nuanced. If vertical integration fears are overblown, Nebius’s customer relationships could prove more durable than currently priced—though this hardly makes the stock cheap at current multiples.

GPU scarcity is ending faster than bulls admit

The margin story depends on sustained GPU shortage. That narrative is already fraying.

Nvidia’s Blackwell ramp is aggressive, TSMC CoWoS capacity is expanding, and Chinese demand has been structurally reduced by export controls. By late 2025, signs of GPU surplus will likely emerge in certain market segments. More critically, demand composition is shifting in ways that hurt Nebius’s current model.

Training demand is lumpy and episodic. GPT-5 training might require 100,000 H100 equivalents, but each training run happens once, then the model deploys, and the next version might be more efficient. Inference demand, by contrast, is steady and growing exponentially. Every ChatGPT query, every Copilot suggestion requires inference compute that scales with user adoption, not model development cycles.

The margin structure differs completely. Training is high-margin, high-utilization, but lumpy. Inference is lower-margin per GPU-hour but higher volume and more predictable. Nebius’s current infrastructure is optimized for training economics.

Bulls projecting 2024 gross margins (~30%) into 2026 are likely wrong. A slide in gross margin to the 15-25% range is plausible as GPU supply normalizes and demand shifts toward inference configurations that Nebius may not be optimized to serve.
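To see why the margin path matters as much as revenue growth, here is a minimal sketch of how much revenue Nebius would need just to hold gross profit flat if margins slide from the ~30% cited above into the 15-25% range. Revenue is normalized to 1.0, a hypothetical placeholder, because only the ratios matter; the function names are illustrative.

```python
def gross_profit(revenue_b, gross_margin):
    """Gross profit in $B for a given revenue and gross margin."""
    return revenue_b * gross_margin


def revenue_to_hold_gross_profit(target_gp_b, gross_margin):
    """Revenue ($B) needed to keep gross profit flat at a lower margin."""
    return target_gp_b / gross_margin


BASE_MARGIN = 0.30  # the ~30% 2024 figure cited in the text
revenue_b = 1.0     # normalized revenue; the growth multiples do not depend on it
baseline_gp = gross_profit(revenue_b, BASE_MARGIN)

for margin in (0.25, 0.20, 0.15):
    needed = revenue_to_hold_gross_profit(baseline_gp, margin)
    print(f"{margin:.0%} margin: revenue must grow {needed / revenue_b:.2f}x "
          f"to hold gross profit flat")
```

At 25%, 20%, and 15% margins, revenue has to grow 1.2x, 1.5x, and 2.0x respectively before gross profit even gets back to where it started.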

The Yandex overhang nobody wants to discuss

The 2024 corporate restructuring is legally complete. But corporate DNA doesn’t change with legal filings.

Several underappreciated concerns persist. How much of Nebius’s technical team was originally Yandex personnel? Are there ongoing employment relationships, consulting arrangements, or knowledge-transfer dependencies with Russia-based engineers? This isn’t about sanctions compliance, which appears to have been addressed. It’s about operational capability and institutional knowledge that can’t be replicated overnight.

Vendor relationships matter too. Data center infrastructure requires deep supplier partnerships. Were these rebuilt from scratch or inherited from Yandex arrangements that could create complications? Then there’s reputation. In a world where ESG considerations affect enterprise procurement, some potential customers may avoid Nebius because of its Russian heritage, regardless of its current corporate structure. Irrational but real.

US-Russia relations remain volatile. Future escalation could trigger expanded sanctions affecting Nebius indirectly, or create procurement headwinds with US government-adjacent customers. The “clean spinoff” narrative allows markets to ignore residual complexity that could limit the addressable customer base or create unexpected execution friction.

The hidden optionality that actually matters

Nebius’s ClickHouse stake isn’t just a financial asset—it’s a potential customer acquisition channel that markets aren’t pricing.

ClickHouse, the open-source analytics database, is increasingly used for real-time AI/ML feature stores and inference logging. Companies running ClickHouse at scale, such as Uber, Spotify, and Cloudflare, need to compute features in real time for ML models, store inference results, and analyze AI application performance. Nebius could offer integrated “ClickHouse + GPU compute” bundles that create switching costs neither ClickHouse Cloud nor hyperscalers can match.

Customer acquisition cost for GPU cloud is extremely high. A built-in lead generation engine could be worth more than the ClickHouse stake’s book value. Based on comparable transactions in the analytics database space, the ClickHouse position likely represents $500M-$1B in value—meaningful downside protection if core infrastructure disappoints, but not transformational relative to Nebius’s current $10B+ market cap.
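The "not transformational" point is easy to check against the figures given. A quick sketch, assuming a $10B market cap (the floor of "$10B+", so the percentages are upper bounds) and, for the per-share figure, assuming that market cap corresponds to a $47 share price; neither pairing comes from company filings, and the function names are illustrative.

```python
def share_of_market_cap(stake_b, market_cap_b):
    """Stake value as a fraction of total market capitalization."""
    return stake_b / market_cap_b


def stake_value_per_share(stake_b, market_cap_b, share_price):
    """Per-share value of the stake: its share of market cap times the share price."""
    return share_of_market_cap(stake_b, market_cap_b) * share_price


MARKET_CAP_B = 10.0  # assumed: lower bound of "$10B+"
SHARE_PRICE = 47.0   # assumed: low end of the $47-50 range

for stake_b in (0.5, 1.0):  # the $500M-$1B range cited in the text
    share = share_of_market_cap(stake_b, MARKET_CAP_B)
    per_share = stake_value_per_share(stake_b, MARKET_CAP_B, SHARE_PRICE)
    print(f"${stake_b:.1f}B stake: at most {share:.0%} of market cap, "
          f"about ${per_share:.2f}/share")
```

On those assumptions the stake is worth at most 5-10% of the company, or roughly $2.35-4.70 per share: a real cushion, but a thin one relative to the share price.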

Additionally, Nebius’s Amsterdam headquarters creates optionality for European AI sovereignty positioning. EU regulations increasingly favor non-US cloud providers for sensitive workloads. Nebius could become the preferred GPU cloud for European enterprises and governments seeking AI capability without US dependency—a dynamic that could unlock €5-10B in contracts that hyperscalers can’t win on regulatory grounds. This requires deliberate strategic positioning not yet visible in company communications.

The bottom line

Look, Nebius is a genuine AI infrastructure play with real contracts and structural tailwinds. At $47-50 per share, though, or roughly 100x earnings, the stock prices in perfect execution on capacity that doesn’t yet exist, using contracts whose terms remain unclear, in a market where GPU scarcity is ending.

The bullish case requires believing Nebius can 10x revenue to $7-9B by the end of 2026 while maintaining margins that face compression as supply normalizes and demand shifts toward inference. Pass at current prices. The risk/reward improves materially below $35, where contract execution risk is better compensated and the ClickHouse stake provides a more meaningful valuation floor.
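Two numbers in that paragraph are worth making explicit: the annual growth a 10x revenue target implies, and how far the stock has to fall to reach $35. A minimal sketch, assuming a two-year horizon (end of 2024 to end of 2026); the horizon is an assumption, not a company target, and the function names are illustrative.

```python
def implied_annual_growth(multiple, years):
    """Compound annual growth rate needed to multiply revenue by `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


def discount_to_entry(current_price, entry_price):
    """Fractional price decline needed to reach the entry price."""
    return 1.0 - entry_price / current_price


# Assumed horizon: end of 2024 to end of 2026, i.e. two years.
cagr = implied_annual_growth(10.0, 2)
print(f"10x in 2 years needs ~{cagr:.0%} growth per year")

for price in (47.0, 50.0):
    print(f"${price:.0f} -> $35 is a {discount_to_entry(price, 35.0):.1%} decline")
```

On that horizon, 10x requires revenue to roughly triple every year (about 216% annual growth), and $35 sits about 25-30% below the $47-50 range.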

Key metrics to monitor quarterly: revenue recognition versus implied contract run-rates (are customers actually drawing on capacity?), gross margin trajectory (a gross margin below 25% signals a structural problem), and customer concentration disclosure (if Microsoft exceeds 50% of revenue, concentration risk dominates all other considerations). The data center deployment timeline is critical: any slippage beyond Q3 2025 for announced expansions indicates execution problems that compound quickly in a capital-intensive business.

Thesis breaks if: (1) Microsoft or Meta materially reduce external GPU cloud spending as internal capacity comes online, (2) gross margins compress below 20% for two consecutive quarters, suggesting pricing power has evaporated, (3) equity dilution exceeds 30% to fund capex requirements, or (4) regulators take any action that limits the company’s ability to serve US customers because of its Yandex heritage.
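The break conditions above, plus the 50% concentration rule from the monitoring list, can be written down as an explicit quarterly checklist. A minimal sketch; the QuarterSnapshot fields, the dilution baseline, and the sample quarter are all hypothetical, and triggers (1) and (4) are left as manual judgment flags because they don't reduce to a formula.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    """Hypothetical per-quarter inputs; field names are illustrative, not reported line items."""
    gross_margins: list          # gross margin by quarter, oldest first (0.19 = 19%)
    cumulative_dilution: float   # share-count growth vs. a chosen baseline (0.12 = 12%)
    top_customer_share: float    # largest customer's share of revenue
    hyperscaler_pullback: bool = False   # trigger (1): a judgment call, not a formula
    us_regulatory_action: bool = False   # trigger (4): a judgment call, not a formula


def thesis_breaks(s: QuarterSnapshot) -> list:
    """Return the thesis-break triggers that have fired."""
    fired = []
    if s.hyperscaler_pullback:
        fired.append("(1) major customer cut external GPU spend")
    recent = s.gross_margins[-2:]
    if len(recent) == 2 and all(m < 0.20 for m in recent):
        fired.append("(2) gross margin below 20% two quarters running")
    if s.cumulative_dilution > 0.30:
        fired.append("(3) dilution above 30%")
    if s.us_regulatory_action:
        fired.append("(4) regulatory action limiting US customers")
    return fired


def concentration_dominates(s: QuarterSnapshot) -> bool:
    """Monitoring rule: concentration risk dominates above 50% of revenue."""
    return s.top_customer_share > 0.50


# Hypothetical quarter: margins below 20% twice, modest dilution, 55% top customer.
snap = QuarterSnapshot(gross_margins=[0.24, 0.19, 0.18],
                       cumulative_dilution=0.12, top_customer_share=0.55)
print(thesis_breaks(snap))            # ['(2) gross margin below 20% two quarters running']
print(concentration_dominates(snap))  # True
```

Keeping the qualitative triggers as explicit flags, rather than folding them into a score, mirrors the list's structure: any single trigger is meant to break the thesis on its own.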

Watch for the inference pivot. If Nebius successfully repositions as distributed edge infrastructure rather than centralized training clusters… well, the competitive dynamics shift favorably. That strategic clarity isn’t yet visible.