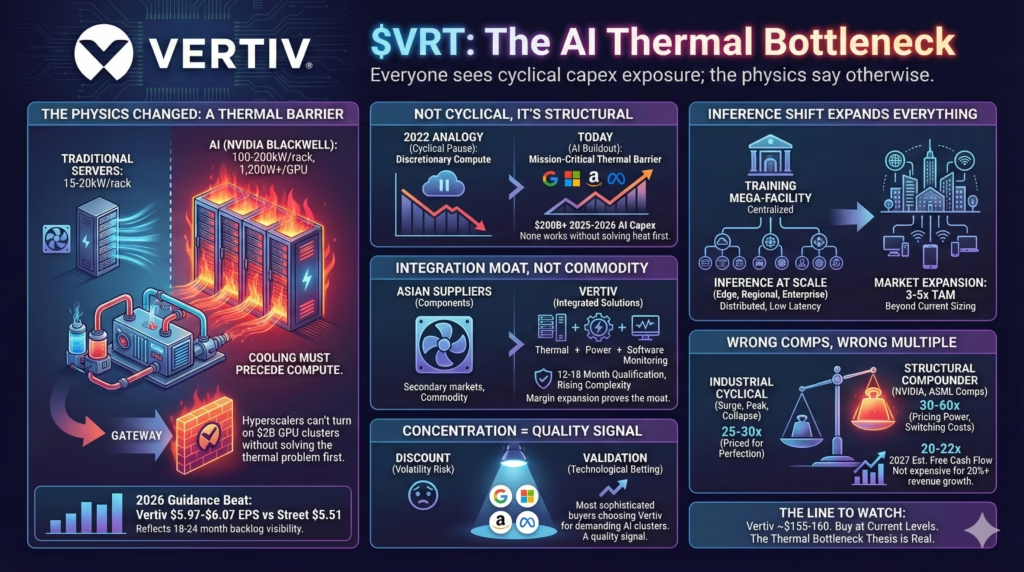

$VRT: the thermal bottleneck for AI

Everyone sees cyclical capex exposure; the physics say otherwise

The Pitch: Vertiv isn’t selling discretionary infrastructure. It’s selling the binding constraint on AI deployment.

The physics changed

NVIDIA’s Blackwell GB200 systems draw 1,200W+ per GPU. Rack densities hit 100-200kW versus 15-20kW for traditional server racks.
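To put the density jump in proportion, the short sketch below turns the two ranges quoted above into a multiple. It uses only the figures in this post; the function name and constants are illustrative, and the bounds pair the most and least favorable ends of each range.

```python
# Rack power ranges quoted in the text, in kW per rack.
AI_RACK_KW = (100.0, 200.0)      # AI racks: low end, high end
LEGACY_RACK_KW = (15.0, 20.0)    # traditional racks: low end, high end


def density_multiple(ai_kw: float, legacy_kw: float) -> float:
    """Return how many times more power an AI rack draws than a legacy rack."""
    return ai_kw / legacy_kw


# Conservative bound: smallest AI rack against the largest legacy rack.
low = density_multiple(AI_RACK_KW[0], LEGACY_RACK_KW[1])
# Aggressive bound: largest AI rack against the smallest legacy rack.
high = density_multiple(AI_RACK_KW[1], LEGACY_RACK_KW[0])

print(f"{low:.1f}x to {high:.1f}x")  # prints "5.0x to 13.3x"
```

Even on the conservative pairing, each rack has to shed about five times the heat of a traditional one.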

What this means in practice: hyperscalers cannot turn on their $2B GPU clusters without solving the thermal problem first. Cooling spend must precede compute spend, not follow it.

The 2026 guidance beat—management at $5.97-$6.07 EPS versus Street at $5.51—isn’t optimism. It reflects 18-24 month backlog visibility that commodity vendors never see.
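As a quick check on the size of that gap, here is a minimal sketch that compares the midpoint of the guidance range with the Street number. The inputs are the figures cited above; using the midpoint as the point of comparison is an assumption made for illustration.

```python
# Figures cited in the text (USD per share).
GUIDANCE_LOW, GUIDANCE_HIGH = 5.97, 6.07
STREET_EPS = 5.51


def guidance_gap(low: float, high: float, street: float) -> tuple[float, float, float]:
    """Return (guidance midpoint, absolute gap vs. Street, gap as a percent of Street)."""
    midpoint = (low + high) / 2
    gap = midpoint - street
    return midpoint, gap, gap / street * 100


midpoint, gap, gap_pct = guidance_gap(GUIDANCE_LOW, GUIDANCE_HIGH, STREET_EPS)
print(f"midpoint ${midpoint:.2f}, gap ${gap:.2f}, {gap_pct:.1f}% above Street")
# prints "midpoint $6.02, gap $0.51, 9.3% above Street"
```

Even the low end of the range, $5.97, sits above the $5.51 Street figure.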

Why the 2022 analogy breaks

The bearish reflex treats Vertiv as another cyclical capex play. Surge during buildout, collapse during pause—the pattern that crushed data center infrastructure when Meta pulled back on metaverse spending. Fresh institutional memory.

It’s also wrong.

In 2022, hyperscalers paused incremental capacity for traditional workloads, where compute was the constraint. Today’s AI buildout faces a thermal barrier instead. Microsoft, Google, Amazon, and Meta are planning $200B+ in 2025-2026 AI capex. None of it works without solving heat dissipation first.

Vertiv isn’t selling to discretionary budgets. It’s selling to “we cannot operate” budgets.

The commoditization narrative runs backwards

Bears assume Asian manufacturers will replicate liquid cooling solutions at lower cost as the technology standardizes. This misunderstands how hyperscaler qualification works.

These customers spend 12-18 months qualifying thermal solutions for mission-critical AI infrastructure. Delta and Auras aren’t in Tier 1 qualification pipelines—they serve secondary markets. It’s like worrying Toyota will take share from ASML. Different games.

Integration complexity is rising, not falling. Modern liquid cooling requires integration with power distribution, rack architecture, and building management systems. Vertiv delivers thermal plus power plus software monitoring as integrated solutions. Component suppliers cannot replicate this.

The test: if gross margins expand 100-200 basis points annually despite volume growth, the integration moat is real.
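The margin test can be made mechanical. The sketch below converts a series of annual gross margins into year-over-year changes in basis points and checks each against the 100 bp floor. The margin series is hypothetical placeholder data, not Vertiv’s reported results, and the function names are illustrative.

```python
def annual_margin_change_bps(gross_margins_pct: list[float]) -> list[int]:
    """Year-over-year change in gross margin, in basis points (1 point = 100 bps)."""
    return [
        round((curr - prev) * 100)
        for prev, curr in zip(gross_margins_pct, gross_margins_pct[1:])
    ]


def moat_test_passes(gross_margins_pct: list[float], min_bps: int = 100) -> bool:
    """True if every annual change meets the minimum expansion threshold."""
    changes = annual_margin_change_bps(gross_margins_pct)
    return bool(changes) and all(change >= min_bps for change in changes)


# HYPOTHETICAL gross margins (percent) for four consecutive years.
example_margins = [35.0, 36.2, 37.5, 39.0]

print(annual_margin_change_bps(example_margins))  # prints [120, 130, 150]
print(moat_test_passes(example_margins))          # prints True
```

A single year below the floor fails the test, which makes it a demanding standard by design.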

Concentration as quality signal

Consensus applies a concentration discount because heavy reliance on four customers creates volatility risk. Valid if Vertiv sold to discretionary enterprise IT budgets.

Wrong when the customers are the most sophisticated infrastructure buyers in the world betting their competitive futures on AI.

Google, Microsoft, Amazon, and Meta have effectively unlimited resources to design cooling in-house or source globally. They’re choosing Vertiv for their most demanding AI clusters. That’s technological validation no analyst report can replicate.

Being concentrated with these four means being concentrated in the fastest-growing infrastructure segment on the planet. Not a bug—a quality signal.

The inference shift expands everything

Consensus focuses on training cluster buildouts. The real demand surge is coming from inference at scale.

Training concentrates in mega-facilities. Inference needs proximity to users for latency—thousands of edge and regional data centers requiring thermal management.

This diversifies Vertiv beyond hyperscaler concentration. Enterprise, telecom, and edge deployments all need AI-capable thermal solutions as inference proliferates. The enterprise segment could see margin expansion as AI inference requires higher-spec cooling.

This tailwind isn’t in current models. It expands Vertiv’s addressable market 3-5x beyond current sizing.

Wrong comps, wrong multiple

At 25-30x forward earnings, the market treats Vertiv as “priced for perfection.” This reflects anchoring on industrial equipment comps during prior capex cycles. The pattern was surge, peak, collapse.

But Vertiv isn’t an industrial equipment company anymore. It’s becoming mission-critical infrastructure in a structural growth market with pricing power and customer captivity.

The appropriate comps: NVIDIA at 30-60x during its AI transition, ASML at 25-35x. When you have pricing power, switching costs, and structural demand growth, traditional industrial multiples don’t apply.

At 20-22x 2027 estimated free cash flow—assuming margins expand as liquid cooling scales—Vertiv isn’t expensive for a structural compounder with 20%+ revenue growth and multi-year demand visibility.
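One way to see why growth changes the reading of that multiple: at an unchanged price, the multiple on later-year cash flow shrinks as cash flow grows. The sketch below assumes free cash flow grows at the same 20% rate as revenue. That is an illustrative assumption, not a forecast, and the function name is hypothetical.

```python
def multiple_on_future_fcf(multiple_today: float, growth: float, years: int) -> float:
    """Price-to-FCF multiple on cash flow `years` ahead, holding price constant.

    ASSUMPTION: free cash flow compounds at `growth` per year.
    """
    return multiple_today / (1 + growth) ** years


START_MULTIPLE = 22.0   # top of the 20-22x range on 2027 estimated FCF
ASSUMED_GROWTH = 0.20   # assumed FCF growth, borrowed from the 20%+ revenue figure

for years_out in (1, 2):
    value = multiple_on_future_fcf(START_MULTIPLE, ASSUMED_GROWTH, years_out)
    print(f"+{years_out}y: {value:.1f}x")
# prints "+1y: 18.3x" then "+2y: 15.3x"
```

If margins expand as well, cash flow grows faster than revenue and the multiple compresses more quickly than shown.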